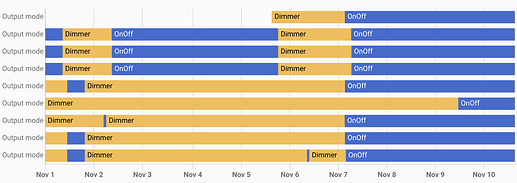

I am still having this issue, and I have realized that in addition to the switch_mode parameter described above, it also affects the switch_type parameter (#22): any switch set to 0x03 (Single Pole Full Sine) gets reset to the default of Single Pole. (It may affect 0x01 and 0x02 as well, but I don’t have any switches configured with those settings at the moment.)

These parameters seem to revert to their default values more often after a restart of Home Assistant. (Part of the reason could be that ZHA reads these attributes from the device during initialization instead of using a cached value, so the displayed value updates right after startup even though the change may have happened some time earlier and was never reported back to the coordinator/ZHA/Home Assistant. Does all of this suggest a bug somewhere in the switch firmware?)

I enabled ZHA debug logging and found that my older switches are matched to the v12 quirk because they do not expose endpoint #3:

2025-03-30 16:03:30.106 DEBUG (MainThread) [zigpy.quirks.registry] Checking quirks for Inovelli VZM31-SN (8a:7f:12:72:ae:21:b2:13)

2025-03-30 16:03:30.106 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SN'>

2025-03-30 16:03:30.106 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2} {1, 2, 242}

2025-03-30 16:03:30.106 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv9'>

2025-03-30 16:03:30.106 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2} {1, 2, 242}

2025-03-30 16:03:30.106 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv10'>

2025-03-30 16:03:30.107 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2} {1, 2, 242}

2025-03-30 16:03:30.107 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv11'>

2025-03-30 16:03:30.108 DEBUG (MainThread) [zigpy.quirks] Fail because input cluster mismatch on at least one endpoint

2025-03-30 16:03:30.108 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv12'>

2025-03-30 16:03:30.108 DEBUG (MainThread) [zigpy.quirks] Device matches filter signature - device ieee[8a:7f:12:72:ae:21:b2:13]: filter signature[{

'models_info': [('Inovelli', 'VZM31-SN')],

'endpoints': {

1: {

'profile_id': 260,

'device_type': <DeviceType.DIMMABLE_LIGHT: 257>,

'input_clusters': [0, 3, 4, 5, 6, 8, 1794, 2820, 2821, 64561, 64599],

'output_clusters': [25]

},

2: {

'profile_id': 260,

'device_type': <DeviceType.DIMMER_SWITCH: 260>,

'input_clusters': [0, 3, 4, 5],

'output_clusters': [3, 6, 8, 64561]},

242: {

'profile_id': 41440,

'device_type': <DeviceType.PROXY_BASIC: 97>,

'input_clusters': [],

'output_clusters': [33]

}

}

}]

2025-03-30 16:03:30.108 DEBUG (MainThread) [zigpy.quirks.registry] Found custom device replacement for 8a:7f:12:72:ae:21:b2:13: <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv12'>
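For anyone comparing several switches, here is a minimal sketch that pulls the device-to-quirk mapping out of a debug log instead of reading every line. It relies only on the "Found custom device replacement" line format shown above; the function name `quirks_by_device` and the regular expression are my own and are not part of zigpy or ZHA.

```python
import re

# Matches lines like:
#   ... Found custom device replacement for <ieee>: <class '<dotted.path.Quirk>'>
REPLACEMENT_RE = re.compile(
    r"Found custom device replacement for (?P<ieee>[0-9a-f:]+): "
    r"<class '(?P<quirk>[\w.]+)'>"
)


def quirks_by_device(log_lines):
    """Return {ieee: quirk class name} for every replacement line found."""
    result = {}
    for line in log_lines:
        match = REPLACEMENT_RE.search(line)
        if match:
            # Keep only the class name, dropping the module path.
            result[match.group("ieee")] = match.group("quirk").rsplit(".", 1)[-1]
    return result
```

Feeding it the lines of the Home Assistant log file (for example `quirks_by_device(open(path))`, where `path` is wherever your log lives) gives one entry per switch, which makes it easy to see which units land on which quirk version.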

My newer switches, on the other hand, are matched to the v13 quirk because they do expose endpoint #3:

2025-03-30 16:03:30.230 DEBUG (MainThread) [zigpy.quirks.registry] Checking quirks for Inovelli VZM31-SN (8e:b1:33:f1:cf:c9:35:8a)

2025-03-30 16:03:30.230 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SN'>

2025-03-30 16:03:30.230 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2} {242, 1, 2, 3}

2025-03-30 16:03:30.230 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv9'>

2025-03-30 16:03:30.230 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2} {242, 1, 2, 3}

2025-03-30 16:03:30.230 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv10'>

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2} {242, 1, 2, 3}

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv11'>

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2, 242} {242, 1, 2, 3}

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv12'>

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks] Fail because endpoint list mismatch: {1, 2, 242} {242, 1, 2, 3}

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks.registry] Considering <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv13'>

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks] Device matches filter signature - device ieee[8e:b1:33:f1:cf:c9:35:8a]: filter signature[{

'models_info': [('Inovelli', 'VZM31-SN')],

'endpoints': {

1: {

'profile_id': 260,

'device_type': <DeviceType.DIMMABLE_LIGHT: 257>,

'input_clusters': [0, 3, 4, 5, 6, 8, 1794, 2820, 2821, 64561, 64599],

'output_clusters': [25]

},

2: {

'profile_id': 260,

'device_type': <DeviceType.DIMMER_SWITCH: 260>,

'input_clusters': [0, 3, 4, 5],

'output_clusters': [3, 6, 8, 64561]},

3: {

'profile_id': 260,

'device_type': <DeviceType.DIMMER_SWITCH: 260>,

'input_clusters': [0, 3, 4, 5],

'output_clusters': [3, 6, 8, 64561]

},

242: {

'profile_id': 41440,

'device_type': <DeviceType.PROXY_BASIC: 97>,

'input_clusters': [],

'output_clusters': [33]

}

}

}]

2025-03-30 16:03:30.231 DEBUG (MainThread) [zigpy.quirks.registry] Found custom device replacement for 8e:b1:33:f1:cf:c9:35:8a: <class 'zhaquirks.inovelli.VZM31SN.InovelliVZM31SNv13'>
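To make the difference between the two logs easier to see, here is a simplified model of the first matching step, assuming a quirk is only considered when its set of endpoint IDs equals the device's. This is not zigpy's actual implementation (the real matcher also compares profile, device type and clusters, which is why v11 fails on input clusters for the older switch). The endpoint sets are copied from the log output above, and `candidates_by_endpoints` is a hypothetical helper name.

```python
# Endpoint ID sets per quirk signature, as reported in the
# "endpoint list mismatch" lines and the matched signatures in the logs.
SIGNATURE_ENDPOINTS = {
    "InovelliVZM31SN": {1, 2},
    "InovelliVZM31SNv9": {1, 2},
    "InovelliVZM31SNv10": {1, 2},
    "InovelliVZM31SNv11": {1, 2, 242},
    "InovelliVZM31SNv12": {1, 2, 242},
    "InovelliVZM31SNv13": {1, 2, 3, 242},
}


def candidates_by_endpoints(device_endpoints):
    """List quirks whose endpoint ID set exactly equals the device's."""
    device_endpoints = set(device_endpoints)
    return [
        name
        for name, endpoints in SIGNATURE_ENDPOINTS.items()
        if endpoints == device_endpoints
    ]


older_switch = candidates_by_endpoints({1, 2, 242})      # v11 and v12 remain
newer_switch = candidates_by_endpoints({1, 2, 3, 242})   # only v13 remains
```

In this model the older switch can never reach v13, because it does not report endpoint 3 at all, and the newer switch can never fall back to v12 for the opposite reason.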

Both the old and new switches are on the same firmware version, v2.18 / 0x01020212. Shouldn’t the older switches have the new endpoint #3 as well?
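In case it helps anyone compare versions, this is how I read the raw value. The byte layout is only my inference from the single pair above (0x01020212 shown as v2.18), so treat it as an assumption: lowest byte is the minor version, the next byte is the major version. `decode_version` is just a name I made up.

```python
def decode_version(raw):
    """Decode a raw 32-bit firmware value under the assumed layout:
    byte 0 (lowest) = minor version, byte 1 = major version."""
    minor = raw & 0xFF          # 0x12 -> 18
    major = (raw >> 8) & 0xFF   # 0x02 -> 2
    return f"v{major}.{minor}"


version = decode_version(0x01020212)
```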